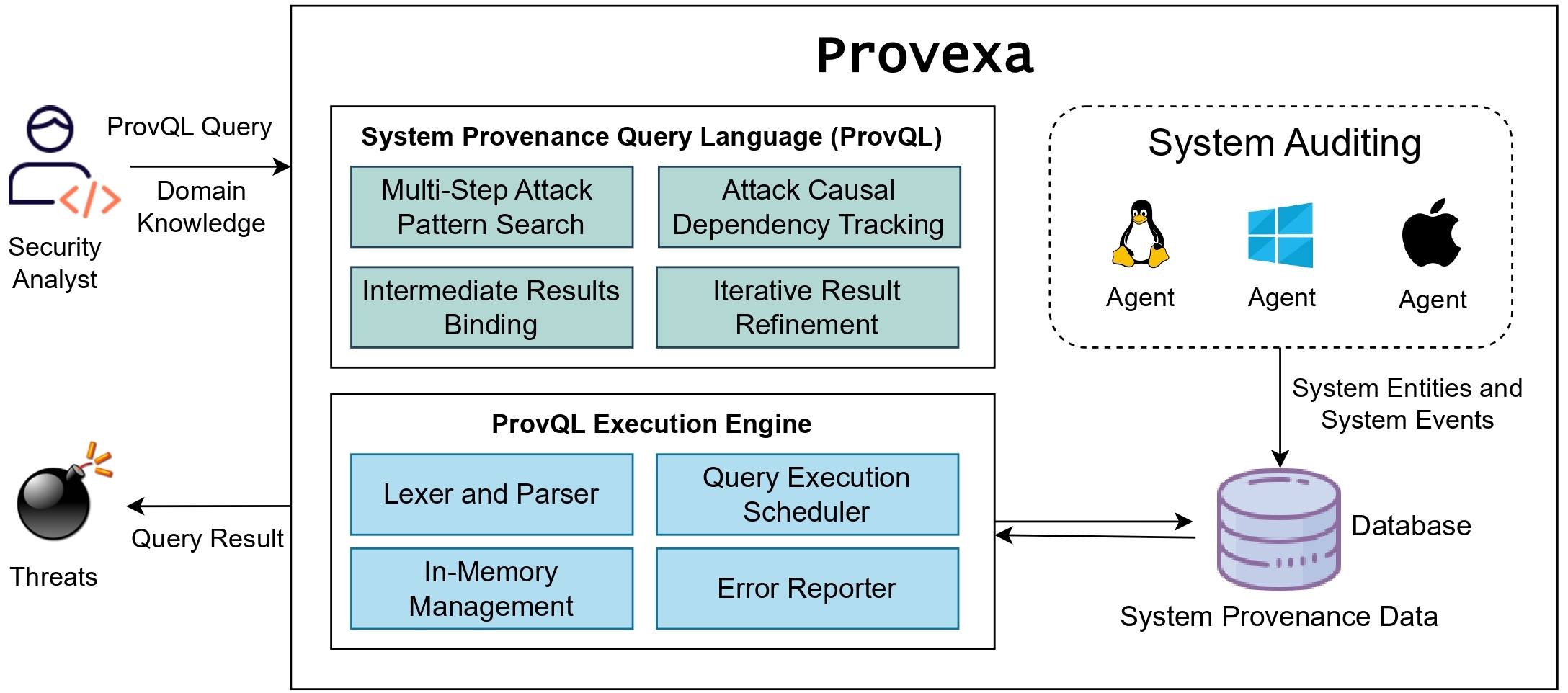

Provexa is a human-in-the-loop investigation platform designed to uncover sophisticated, multi-stage cyberattacks by analyzing massive volumes of system audit data. At its core lies ProvQL, a powerful domain-specific language tailored for security analysts working over system provenance graphs.

The architecture comprises lightweight system agents that collect OS-level events (file, process, and network interactions), which are then parsed and stored in graph or relational databases. These events are transformed into provenance graphs where nodes represent system entities and edges represent causal event relationships.
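The following Python sketch shows one way this transformation could look. It makes several assumptions. `Event` is a hypothetical audit record with a subject, operation, object, and timestamp. Node names follow a hypothetical `proc:`/`file:`/`ip:` scheme. Edges follow information flow, so a read points from the object to the process. This illustrates the idea only; it is not Provexa's actual parser or storage schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One OS-level audit record (hypothetical schema): subject acts on object."""
    subject: str    # e.g. a process node id such as "proc:curl"
    operation: str  # e.g. "read", "write", "send"
    obj: str        # e.g. "file:/tmp/a.tar" or "ip:203.0.113.7"
    timestamp: int


def build_provenance_graph(events):
    """Return (nodes, out_edges): entities become nodes, events become edges.

    Edge direction follows information flow: a read or recv flows
    object -> process, while a write or send flows process -> object.
    """
    nodes = set()
    out_edges = defaultdict(list)  # src -> [(dst, operation, timestamp)]
    for ev in events:
        nodes.update((ev.subject, ev.obj))
        if ev.operation in ("read", "recv"):
            src, dst = ev.obj, ev.subject
        else:
            src, dst = ev.subject, ev.obj
        out_edges[src].append((dst, ev.operation, ev.timestamp))
    return nodes, out_edges


# Hypothetical events; 203.0.113.7 is a documentation address.
events = [
    Event("proc:curl", "read", "file:/tmp/sensitive_data.tar", 10),
    Event("proc:curl", "send", "ip:203.0.113.7", 11),
]
nodes, out_edges = build_provenance_graph(events)
```

Orienting edges by information flow is a common choice because a backward trace then walks against edge direction and a forward trace walks along it.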

Analysts interact with Provexa via a notebook-style UI that enables constructing queries, visualizing results, and progressively refining hypotheses. Two core primitives support investigation:

The domain-aware query engine schedules subqueries to optimize execution performance, and in-memory result management supports fast, iterative analysis. Together, these let analysts stay focused on surfacing relevant attack behaviors without wading through irrelevant noise.
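The paragraph does not spell out how results are managed, so the following is only a minimal sketch of the in-memory idea under our own assumptions. A hypothetical `InvestigationSession` caches each named result, such as `g3`, so that refining a hypothesis reuses the cached result instead of re-querying the database. The `fake_db` function and its query string are placeholders, not ProvQL.

```python
class InvestigationSession:
    """Hypothetical notebook session that keeps named query results in memory."""

    def __init__(self, fetch_from_db):
        self._fetch = fetch_from_db  # callable(query) -> iterable of edges
        self._results = {}           # result name -> set of edges
        self.db_calls = 0            # counts round trips to the backing store

    def run(self, name, query):
        """Execute `query` against the database and cache the result as `name`."""
        self.db_calls += 1
        self._results[name] = set(self._fetch(query))
        return self._results[name]

    def get(self, name):
        """Return a cached result without touching the database."""
        return self._results[name]


def fake_db(query):
    """Stand-in for a graph or relational database lookup (hypothetical)."""
    if query == "backtrack curl":
        return {("proc:sh", "proc:curl"), ("proc:lighttpd", "proc:sh")}
    return set()


session = InvestigationSession(fake_db)
g3 = session.run("g3", "backtrack curl")
# A follow-up refinement filters the cached result; no new database call.
lighttpd_edges = {e for e in session.get("g3") if "lighttpd" in e[0]}
```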

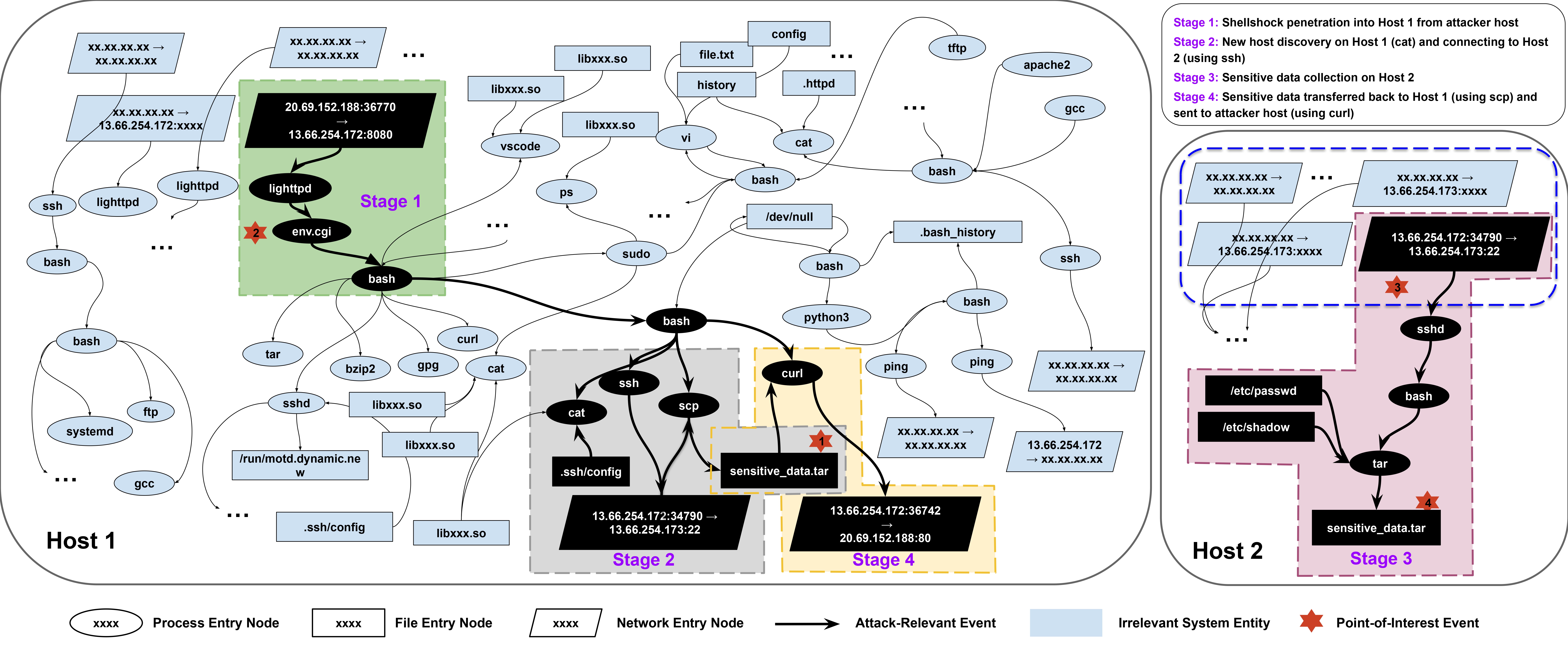

System dependency graphs for a multi-stage, multi-host data leakage attack. The combined dependency graphs of the two victim hosts contain 100,524 nodes and 154,353 edges. The attack-relevant nodes and edges, highlighted in black, comprise only 20 nodes and 20 edges, illustrating how hard it is to find this needle in a haystack.
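To put these counts in proportion (our own arithmetic on the numbers above), the attack-relevant portion is a tiny fraction of the combined graph:

$$
\frac{20}{100{,}524} \approx 0.020\,\% \text{ of nodes}, \qquad \frac{20}{154{,}353} \approx 0.013\,\% \text{ of edges}.
$$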

Sophisticated cyberattacks like APTs unfold over multiple hosts and stages, often blending into normal activity. Traditional forensic tools fail to cope due to:

Provexa addresses these challenges. Below, we demonstrate how ProvQL is used to investigate the data leakage attack shown in the figure above.

We start by searching for a process (e.g., curl) that reads a *.tar file and immediately sends it over the network. The search result confirms data exfiltration involving sensitive_data.tar and is stored in poi1 for further analysis.
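The walkthrough expresses this search in ProvQL. As a language-neutral illustration only, the Python sketch below applies the same pattern to a hypothetical list of `(process, operation, object, timestamp)` events. Several details are assumptions: a 5-second `window` stands in for "immediately", processes are identified by name alone, and the file paths and destination addresses are made up.

```python
from fnmatch import fnmatch

# Hypothetical audit events: (process, operation, object, timestamp in seconds).
events = [
    ("vscode", "read", "/home/u/project.tar", 100),
    ("curl", "read", "/tmp/sensitive_data.tar", 200),
    ("curl", "send", "203.0.113.7:443", 201),
    ("backup", "read", "/var/b.tar", 300),
    ("backup", "send", "198.51.100.2:22", 900),
]


def find_tar_exfiltration(events, window=5):
    """Return (process, tar_file) pairs where a process reads a *.tar file and
    sends data over the network within `window` seconds afterwards."""
    hits = []
    for proc, op, obj, t_read in events:
        if op != "read" or not fnmatch(obj, "*.tar"):
            continue
        for proc2, op2, _dest, t_send in events:
            if proc2 == proc and op2 == "send" and 0 <= t_send - t_read <= window:
                hits.append((proc, obj))
                break  # one matching send is enough for this read
    return hits


poi1 = find_tar_exfiltration(events)
```

The `backup` process also reads a tarball and later sends data, but the gap exceeds the window, so the time constraint filters it out.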

We then trace backward from poi1 on Host 1 to find the origin of the sensitive_data.tar file, filtering out benign processes such as vscode. The results reveal that an scp process created the file by copying it from Host 2, confirming a remote data transfer.
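A backward trace with an exclusion filter can be sketched as a breadth-first walk against the direction of information flow. The graph below is hypothetical, including the `ip:host2:22` node. Matching excluded processes by substring is our simplification of filtering out benign processes such as vscode.

```python
from collections import deque

# Hypothetical information-flow edges on Host 1: node -> list of its sources.
in_edges = {
    "file:sensitive_data.tar": ["proc:scp", "proc:vscode"],
    "proc:scp": ["ip:host2:22", "proc:bash"],
    "proc:vscode": ["file:settings.json"],
    "proc:bash": [],
}


def backward_trace(start, in_edges, exclude=()):
    """Breadth-first walk against edge direction from `start`, skipping any
    node whose name contains one of the `exclude` substrings."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for src in in_edges.get(node, []):
            if src in seen or any(x in src for x in exclude):
                continue
            seen.add(src)
            queue.append(src)
    return seen


origin_nodes = backward_trace("file:sensitive_data.tar", in_edges, exclude=("vscode",))
```

Excluding `proc:vscode` also prunes everything reachable only through it, such as `file:settings.json`.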

We backtrack the creation of sensitive_data.tar on Host 2. The query reveals a tar process packed /etc/passwd and /etc/shadow into the archive, confirming the data collection phase of the attack.
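The core of this step is a one-hop backward look through the writer of the archive: which files did the process that produced it read beforehand? The sketch below is a hypothetical illustration. The event list and the `/tmp/sensitive_data.tar` path are assumptions, as is the rule that only reads before the writer's last write to the archive count.

```python
# Hypothetical Host 2 events: (process, operation, object, timestamp).
host2_events = [
    ("tar", "read", "/etc/passwd", 50),
    ("tar", "read", "/etc/shadow", 51),
    ("tar", "write", "/tmp/sensitive_data.tar", 52),
    ("tar", "read", "/etc/hosts", 60),  # after the write: cannot have contributed
    ("scp", "read", "/tmp/sensitive_data.tar", 70),
]


def sources_of(archive, events):
    """For each process that wrote `archive`, return the files it read before
    its last write to the archive."""
    last_write = {}
    for proc, op, obj, t in events:
        if op == "write" and obj == archive:
            last_write[proc] = max(t, last_write.get(proc, t))
    return {
        (proc, obj)
        for proc, op, obj, t in events
        if op == "read" and proc in last_write and t < last_write[proc] and obj != archive
    }


collected = sources_of("/tmp/sensitive_data.tar", host2_events)
```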

We trace backward from the curl process on Host 1 to uncover the attack's entry point. Non-critical activity like ping is excluded. The resulting graph, stored in g3, helps isolate the origin of the malicious process.
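Unlike a plain reachability walk, a causality-aware backward trace only follows an edge if it happened no later than an event through which its destination was reached. This keeps later, unrelated activity out of the result. The sketch below implements that rule over hypothetical timestamped edges. It illustrates the general technique and is not necessarily how Provexa's tracker works.

```python
from collections import deque

# Hypothetical timestamped information-flow edges on Host 1: (src, dst, timestamp).
edges = [
    ("ip:20.69.152.188", "proc:lighttpd", 10),
    ("proc:lighttpd", "proc:sh", 12),
    ("proc:sh", "proc:curl", 15),
    ("proc:sh", "proc:ping", 16),
    ("proc:ping", "proc:curl", 17),   # noise to be excluded
    ("file:late.log", "proc:sh", 30),  # happens after sh influenced curl
]


def causal_backtrack(start, edges, exclude=()):
    """Walk backward from `start`, following an edge only if its timestamp is
    no later than the latest event through which its destination was reached.
    Sources whose name contains an `exclude` substring are skipped.
    Returns the traversed subgraph as a set of (src, dst, timestamp) edges."""
    incoming = {}
    for src, dst, t in edges:
        incoming.setdefault(dst, []).append((src, t))
    latest = {start: float("inf")}  # latest usable time bound per node
    queue = deque([start])
    subgraph = set()
    while queue:
        node = queue.popleft()
        for src, t in incoming.get(node, []):
            if t > latest[node] or any(x in src for x in exclude):
                continue
            subgraph.add((src, node, t))
            if t > latest.get(src, float("-inf")):
                latest[src] = t  # looser bound found: re-expand this node
                queue.append(src)
    return subgraph


g3 = causal_backtrack("proc:curl", edges, exclude=("ping",))
```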

We search the in-memory graph g3 for events involving the attacker’s IP 20.69.152.188. The query reveals a lighttpd process that reads from this IP, suggesting the attacker exploited a web server vulnerability to compromise Host 1. The result is saved in poi2.
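Because g3 is already in memory, this search can be a filter over its edges rather than a database query. Here is a minimal sketch, assuming g3 is held as a set of hypothetical `(src, dst, timestamp)` information-flow edges.

```python
ATTACKER = "ip:20.69.152.188"

# Hypothetical in-memory graph g3 as (src, dst, timestamp) flow edges.
g3 = {
    (ATTACKER, "proc:lighttpd", 10),
    ("proc:lighttpd", "proc:sh", 12),
    ("proc:sh", "proc:curl", 15),
}


def events_involving(graph, node):
    """Return the edges in `graph` that touch `node`, sorted by timestamp."""
    return sorted((e for e in graph if node in (e[0], e[1])), key=lambda e: e[2])


hits = events_involving(g3, ATTACKER)
# Processes that received data from the attacker IP (flow edge ip -> process).
poi2 = [dst for src, dst, _ in hits if src == ATTACKER and dst.startswith("proc:")]
```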

We merge g1 and g3 into g4 to build a comprehensive view of the attack on Host 1, then perform a forward trace from the entry point poi2 within this graph. After filtering out irrelevant nodes such as cat, the resulting trace (g5) captures the critical path from entry to exfiltration.
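Merging and forward tracing can be sketched in an edge-set representation. The merge is a set union. The forward trace follows edges whose timestamps are no earlier than the earliest time each node was reached. The edge sets below are hypothetical stand-ins for g1 and g3, and `203.0.113.7` is a documentation address standing in for the exfiltration endpoint.

```python
from collections import deque

# Hypothetical stand-ins for g1 and g3 as (src, dst, timestamp) flow edges.
g1 = {
    ("proc:sh", "proc:scp", 14),
    ("proc:scp", "file:sensitive_data.tar", 16),
    ("file:sensitive_data.tar", "proc:curl", 20),
    ("proc:curl", "ip:203.0.113.7", 21),
}
g3 = {
    ("ip:20.69.152.188", "proc:lighttpd", 10),
    ("proc:lighttpd", "proc:sh", 12),
    ("proc:sh", "proc:cat", 17),
    ("proc:sh", "proc:curl", 19),
}


def causal_forward(start, edges, exclude=()):
    """Walk forward from `start`, following an edge only if its timestamp is no
    earlier than the earliest time its source node was reached. Destinations
    whose name contains an `exclude` substring are skipped. Returns the
    traversed subgraph as a set of (src, dst, timestamp) edges."""
    outgoing = {}
    for src, dst, t in edges:
        outgoing.setdefault(src, []).append((dst, t))
    earliest = {start: float("-inf")}  # earliest arrival time per node
    queue = deque([start])
    subgraph = set()
    while queue:
        node = queue.popleft()
        for dst, t in outgoing.get(node, []):
            if t < earliest[node] or any(x in dst for x in exclude):
                continue
            subgraph.add((node, dst, t))
            if t < earliest.get(dst, float("inf")):
                earliest[dst] = t  # reached earlier than before: re-expand
                queue.append(dst)
    return subgraph


g4 = g1 | g3  # merge: union of the two edge sets
g5 = causal_forward("proc:lighttpd", g4, exclude=("cat",))
```

Starting from the entry point drops the edge leading into it, and the exclusion removes the `cat` branch. The result is the lighttpd → sh → scp/curl → exfiltration path.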

The dark paths shown in Fig. 1 visualize the output of g5, capturing the key attack steps with minimal noise. This showcases how ProvQL enables efficient, step-by-step investigation of complex, multi-stage attacks.

For more details on attack scenarios, example queries, and experimental results, see the full appendix page.

@article{tsegai2025provexa,
  title = {Enabling Efficient Attack Investigation via Human-in-the-Loop Security Analysis},
  author = {Tsegai, Saimon Amanuel and Yang, Xinyu and Liu, Haoyuan and Gao, Peng},
  year = {2025},
  issue_date = {July 2025},
  journal = {Proceedings of the VLDB Endowment},
  volume = {18},
  number = {11},
  doi = {10.14778/3749646.3749653},
  pages = {3771--3783},
}